Emotions and sentiments are essential aspects of human communication and behavior. They influence our decisions, preferences, and actions, as well as our interactions with others. Understanding and analyzing emotions and sentiments can provide valuable insights for various applications, such as marketing, education, healthcare, entertainment, and social media.

However, emotions and sentiments are not easy to measure and quantify. They are subjective, complex, and context-dependent, and they can be expressed in different ways, such as facial expressions, body language, voice tones, and text. Therefore, traditional methods of emotion and sentiment analysis, such as surveys, questionnaires, and interviews, are often limited, costly, and time-consuming.

This is where artificial intelligence (AI) and computer vision come in. Computer vision is the field of AI that aims to create machines and systems that can perceive, understand, and interpret visual information, such as images and videos. By combining computer vision with related techniques, such as deep learning and natural language processing, we can develop systems that automatically detect, recognize, and analyze emotions and sentiments from visual data, such as faces and gestures, and from accompanying text.

In this article, we will explore the current state and future trends of AI emotion and sentiment analysis with computer vision in 2024. We will cover the following topics:

- What is AI emotion and sentiment analysis with computer vision?

- How does AI emotion and sentiment analysis with computer vision work?

- What are the benefits and challenges of AI emotion and sentiment analysis with computer vision?

- What are the current and emerging applications of AI emotion and sentiment analysis with computer vision?

What is AI Emotion and Sentiment Analysis with Computer Vision?

AI emotion and sentiment analysis with computer vision is the task of using AI and computer vision techniques to automatically detect, recognize, and analyze emotions and sentiments from visual data, such as images and videos.

Emotions are the psychological states that reflect our feelings, moods, and attitudes. They are usually categorized into basic emotions, such as happiness, sadness, anger, fear, surprise, and disgust, or complex emotions, such as boredom, frustration, excitement, and embarrassment. Emotions can be expressed through facial expressions, body language, and voice tones, which can be captured and analyzed by computer vision systems.

Sentiments are the opinions, attitudes, and evaluations that we have towards something or someone. They are usually expressed in text, such as reviews, comments, tweets, and posts, which can be analyzed by natural language processing techniques. Sentiments can be positive, negative, or neutral, or they can be more fine-grained, such as happy, sad, angry, or disgusted. Sentiments can also be influenced by emotions, and vice versa.

AI emotion and sentiment analysis with computer vision can combine both visual and textual information to provide a more comprehensive and accurate understanding of human emotions and sentiments. For example, a computer vision system can analyze the facial expressions and body language of a person who is watching a movie, and a natural language processing system can analyze the text of their review, to determine their overall emotion and sentiment towards the movie.
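
One simple way to combine the two sources is late fusion: each system scores the same set of labels, and the scores are merged with a weighted average. The snippet below is a minimal sketch of that idea, not a description of any particular product. The label names, the example scores, and the `visual_weight` parameter are all assumptions made for illustration; a real system would take these scores from trained models.

```python
def late_fusion(visual_probs, text_probs, visual_weight=0.5):
    """Merge two probability distributions over the same labels by weighted average."""
    if not 0.0 <= visual_weight <= 1.0:
        raise ValueError("visual_weight must be in [0, 1]")
    if visual_probs.keys() != text_probs.keys():
        raise ValueError("both modalities must score the same labels")
    fused = {
        label: visual_weight * visual_probs[label]
        + (1.0 - visual_weight) * text_probs[label]
        for label in visual_probs
    }
    total = sum(fused.values())
    if total == 0:
        raise ValueError("scores must not all be zero")
    # Renormalise so the result sums to 1 even if the inputs are slightly off.
    return {label: score / total for label, score in fused.items()}


def top_label(probs):
    """Return the label with the highest score."""
    return max(probs, key=probs.get)


# Made-up scores: the viewer's face looks neutral, but the written review is positive.
visual = {"positive": 0.2, "neutral": 0.5, "negative": 0.3}
text = {"positive": 0.7, "neutral": 0.2, "negative": 0.1}

fused = late_fusion(visual, text, visual_weight=0.4)
print(top_label(fused), fused)
```

With these made-up numbers the review outweighs the neutral face, so the fused label is positive. How much weight each modality deserves is a design choice that would normally be tuned on labeled data.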

How does AI Emotion and Sentiment Analysis with Computer Vision Work?

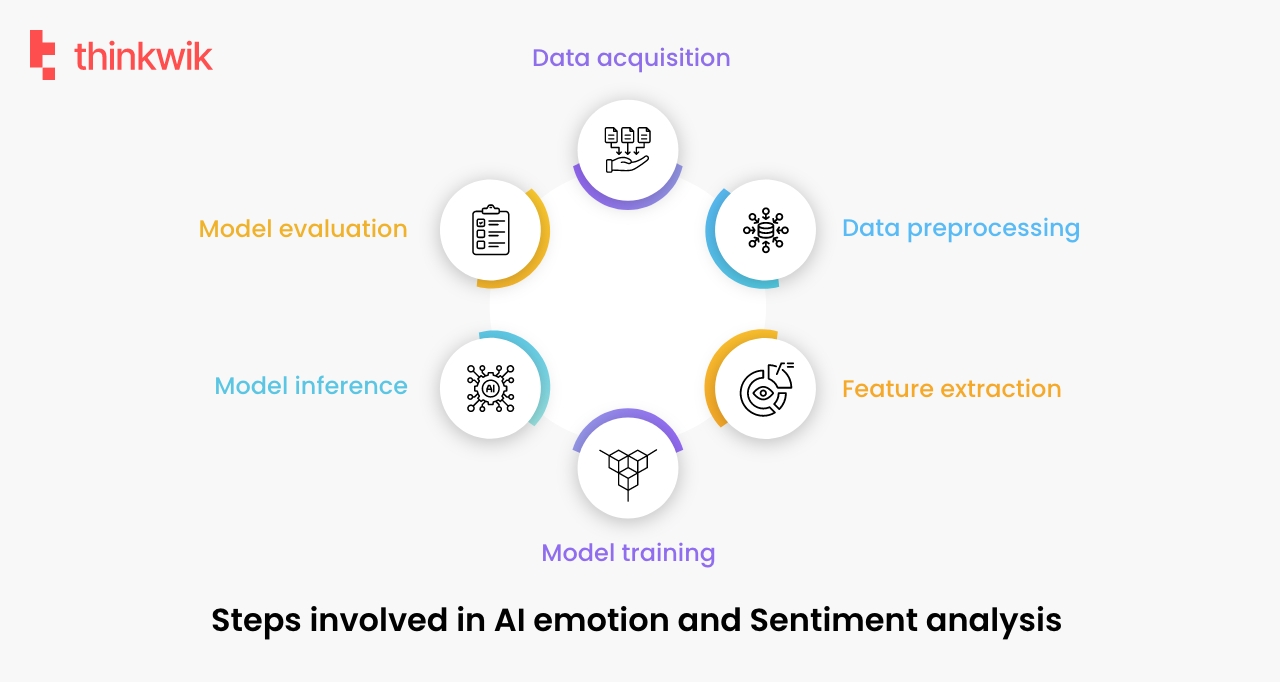

AI emotion and sentiment analysis with computer vision involves several steps and techniques, such as:

- Data acquisition: This is the process of collecting and storing visual data, such as images and videos, that contain emotions and sentiments. The data can be obtained from various sources, such as cameras, webcams, smartphones, social media platforms, and online databases.

- Data preprocessing: This is the process of cleaning and transforming the visual data, such as cropping, resizing, rotating, enhancing, and filtering, to make it suitable for further analysis. The data can also be annotated with labels, such as emotion and sentiment categories, to facilitate the training and evaluation of AI models.

- Feature extraction: This is the process of extracting and selecting the relevant and informative features from the visual data, such as facial landmarks, facial expressions, eye gaze, head pose, body posture, gestures, and text. The features can be extracted using various techniques, such as edge detection, face detection, optical character recognition, and natural language processing.

- Model training: This is the process of building and training AI models, such as deep neural networks, that can learn from the features and labels of the visual data, and perform tasks such as emotion and sentiment classification, regression, or generation. The models can be trained under different learning paradigms, such as supervised learning, unsupervised learning, or reinforcement learning.

- Model inference: This is the process of using the trained AI models to make predictions or generate outputs for new visual data, such as images and videos, that contain emotions and sentiments. The models can output the probabilities or scores of different emotion and sentiment categories, or they can generate new visual data that reflect certain emotions and sentiments.

- Model evaluation: This is the process of measuring the performance of the AI models on held-out data, using metrics such as accuracy, precision, recall, and F1-score, often alongside a confusion matrix that shows which categories are mistaken for one another. The models can also be evaluated based on their robustness, scalability, and interpretability.
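
The training, inference, and evaluation steps above can be sketched in a few lines. This is a toy illustration only: a nearest-centroid classifier stands in for the deep neural networks mentioned above, and the two features (`mouth_corner_lift`, `brow_lowering`) and all data points are hypothetical values invented for the example, as if an earlier feature extraction step had already produced them.

```python
from collections import defaultdict
from math import dist

# Hypothetical hand-made features: (mouth_corner_lift, brow_lowering), each in [0, 1].
TRAIN = [
    ((0.9, 0.1), "happy"), ((0.8, 0.2), "happy"), ((0.7, 0.1), "happy"),
    ((0.1, 0.9), "angry"), ((0.2, 0.8), "angry"), ((0.1, 0.7), "angry"),
    ((0.2, 0.2), "neutral"), ((0.3, 0.3), "neutral"), ((0.25, 0.15), "neutral"),
]
TEST = [
    ((0.85, 0.15), "happy"), ((0.15, 0.85), "angry"),
    ((0.3, 0.2), "neutral"), ((0.5, 0.3), "happy"),  # last one is a faint smile
]


def train(samples):
    """Model training: average the feature vectors of each class into a centroid."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Model inference: return the label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


def evaluate(centroids, samples):
    """Model evaluation: accuracy plus per-class precision, recall, and F1."""
    pairs = [(label, predict(centroids, features)) for features, label in samples]
    accuracy = sum(true == pred for true, pred in pairs) / len(pairs)
    report = {}
    for label in centroids:
        tp = sum(true == label and pred == label for true, pred in pairs)
        fp = sum(true != label and pred == label for true, pred in pairs)
        fn = sum(true == label and pred != label for true, pred in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    return accuracy, report


centroids = train(TRAIN)
accuracy, report = evaluate(centroids, TEST)
print(f"accuracy: {accuracy:.2f}")
for label, scores in report.items():
    print(label, scores)
```

The last test sample, a faint smile, lands closer to the neutral centroid and is misclassified. That single error lowers recall for "happy" and precision for "neutral", which is the kind of detail that accuracy alone would hide.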

What are the Benefits and Challenges of AI Emotion and Sentiment Analysis with Computer Vision?

AI emotion and sentiment analysis with computer vision has many benefits, such as:

- It can provide objective, quantitative, and real-time measurements of emotions and sentiments, which can complement or replace subjective, qualitative, and delayed methods, such as surveys, questionnaires, and interviews.

- It can capture and analyze both explicit and implicit emotions and sentiments, which may reveal feelings and opinions that people do not express verbally or that they try to hide.

- It can process and analyze large-scale and diverse visual data, such as images and videos, from various sources and domains, which can provide rich and comprehensive insights for various applications and purposes.

- It can enable and enhance human-computer interaction, human-machine communication, and human-human collaboration, by allowing computers and machines to understand and respond to human emotions and sentiments, and by facilitating empathy and rapport among humans.

However, AI emotion and sentiment analysis with computer vision also faces many challenges, such as:

- It can be affected by various factors, such as noise, occlusion, illumination, pose, expression, culture, context, and individual differences, which can make the detection, recognition, and analysis of emotions and sentiments difficult and unreliable.

- It can raise ethical, legal, and social issues, such as privacy, security, consent, bias, fairness, accountability, and transparency, which can affect the trust and acceptance of AI systems and their users, customers, and stakeholders.

- It can have unintended or undesirable consequences, such as manipulation, deception, discrimination, and emotional contagion, which can harm the well-being, dignity, and rights of people, especially vulnerable groups, such as children, the elderly, and minorities.
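
Some of these challenges can at least be measured. As one hedged example, bias can be probed by comparing a model's accuracy across groups of people. The sketch below assumes a hypothetical list of `(group, true_label, predicted_label)` records; the group names "A" and "B" and all predictions are made up, and a per-group accuracy gap is only one of several possible fairness checks.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted in records:
        total[group] += 1
        correct[group] += int(true_label == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Made-up predictions for two hypothetical groups.
records = [
    ("A", "happy", "happy"), ("A", "sad", "sad"),
    ("A", "angry", "angry"), ("A", "happy", "happy"),
    ("B", "happy", "happy"), ("B", "sad", "neutral"),
    ("B", "angry", "sad"), ("B", "happy", "happy"),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap, as in this invented data, would suggest that the model works noticeably worse for one group and that its training data or features deserve a closer look.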

What are the Current and Emerging Applications of AI Emotion and Sentiment Analysis with Computer Vision?

AI emotion and sentiment analysis with computer vision has many current and emerging applications across various industries and domains, such as:

- Marketing and advertising: AI emotion and sentiment analysis with computer vision can help marketers and advertisers to understand and influence the emotions and sentiments of their target audiences, and to optimize their campaigns, products, and services. For example, AI systems can analyze the facial expressions and body language of consumers who are watching ads, and the text of their feedback, to measure their emotional and sentimental responses, and to tailor their ads accordingly.

- Education and learning: AI emotion and sentiment analysis with computer vision can help educators and learners to enhance and personalize the learning process and outcomes, and to improve the learning experience and engagement. For example, AI systems can analyze the facial expressions and body language of students who are learning online, and the text of their comments and questions, to assess their emotional and sentimental states, and to provide adaptive feedback and support.

- Healthcare and wellness: AI emotion and sentiment analysis with computer vision can help healthcare providers and patients to monitor and improve the physical and mental health and well-being of people, and to prevent and treat various diseases and disorders. For example, AI systems can analyze the facial expressions and body language of patients who are suffering from depression, anxiety, or dementia, and the text of their conversations and journals, to diagnose their emotional and sentimental conditions, and to provide therapeutic interventions and recommendations.

- Entertainment and media: AI emotion and sentiment analysis with computer vision can help entertainment and media creators and consumers to create and enjoy more immersive, interactive, and personalized content and experiences, and to express and share their emotions and sentiments. For example, AI systems can analyze the facial expressions and body language of gamers who are playing video games, and the text of their reviews and ratings, to evaluate their emotional and sentimental reactions, and to generate or modify the game content and scenarios accordingly.

- Social media and communication: AI emotion and sentiment analysis with computer vision can help social media and communication platforms and users to understand and communicate their emotions and sentiments more effectively and efficiently, and to enhance their social relationships and networks. For example, AI systems can analyze the facial expressions and body language of users who are using video calls, and the text of their messages and posts, to detect and convey their emotional and sentimental cues, and to provide emoticons, stickers, and filters that match their moods.

Looking ahead, one key trend is the development of more advanced and robust AI models and techniques, such as multimodal, cross-modal, and self-supervised learning, that can integrate and leverage multiple sources and types of visual data.

As an example, consider a web app that allows users to upload their photos or videos and get feedback on their emotions and sentiments. The app can use AI and computer vision to analyze the users' facial expressions and text and provide them with a report on their emotional and sentimental states, along with tips and suggestions to improve their well-being. The app can also allow users to compare their emotions and sentiments with those of others, and to share their results on social media.
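
The reporting step of such an app could be as simple as aggregating per-frame model outputs. The sketch below assumes a hypothetical upstream model that returns one dictionary of emotion probabilities per video frame; the function name `summarize_frames`, the report fields, and the scores are all invented for illustration.

```python
from collections import Counter


def summarize_frames(frame_scores):
    """frame_scores: list of dicts mapping emotion label -> probability, one per frame."""
    if not frame_scores:
        raise ValueError("need at least one frame")
    # Count which emotion scores highest in each frame.
    dominant = Counter(max(scores, key=scores.get) for scores in frame_scores)
    top, count = dominant.most_common(1)[0]
    # Average each emotion's score over all frames.
    labels = frame_scores[0].keys()
    mean_scores = {
        label: sum(frame[label] for frame in frame_scores) / len(frame_scores)
        for label in labels
    }
    return {
        "dominant_emotion": top,
        "share_of_frames": count / len(frame_scores),
        "mean_scores": mean_scores,
    }


# Made-up scores for four frames of an uploaded video.
frames = [
    {"happy": 0.7, "neutral": 0.2, "sad": 0.1},
    {"happy": 0.6, "neutral": 0.3, "sad": 0.1},
    {"happy": 0.2, "neutral": 0.7, "sad": 0.1},
    {"happy": 0.8, "neutral": 0.1, "sad": 0.1},
]

summary = summarize_frames(frames)
print(summary)
```

Reporting a share of frames rather than a single label keeps the report honest about how mixed the signal was over the clip.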

In summary, AI emotion and sentiment analysis with computer vision is changing how we understand human emotions. By combining artificial intelligence with visual data, this technology offers diverse applications in marketing, education, healthcare, entertainment, and social media. It is promising, but challenges such as noisy data, bias, and privacy need attention. Advances in multimodal learning, cross-modal integration, and self-supervised techniques point toward more accurate and personalized systems.